By Ryan Falkenberg

Greater automation can help staff improve clients’ experience, at a time when increasingly onerous regulations are turning staff into liabilities

In the financial services sector in particular, compliance is a complex issue. You can train people, and retrain them, but this is time-consuming and costly. And even once they are trained, how do you ensure they act in accordance with the relevant legislation and regulation? And how do you prove they did so, in a court of law if necessary?

Financial services companies are under pressure to reduce their cost structures, yet with more informed customers come more complex service requests. Product, policy and procedural complexity is increasing and, along with increasing legislative oversight, the pressure on service staff to become deep content specialists is mounting. This has cost implications because these companies are required to train and maintain a pool of deep specialists who cannot be deployed across multiple product lines.

Further, expecting staff to be walking, talking legislative and policy encyclopedias isn’t realistic, and it distracts them from their actual role of providing the customer service that is becoming increasingly important in the digital era.

The problem, simply put, lies with how we train people and how we expect them to consistently interpret and apply generic information to complex situations.

Traditional skills development models continue to be constrained by industrial-era thinking, which was influenced mainly by the need to develop a compliant workforce. So the way we teach people focuses on transferring existing knowledge into their heads, with tests validating that the transfer has succeeded.

The challenge is that people often learn using short-term memory, so while they may pass the test, they tend to forget much of what they learned. In addition, what they are taught changes so quickly that their knowledge is soon outdated, leaving them vulnerable.

In a compliance scenario, this is potentially disastrous. It also inhibits people: scared of getting something wrong, they hesitate to act and do not perform optimally, and their job satisfaction and productivity decline.

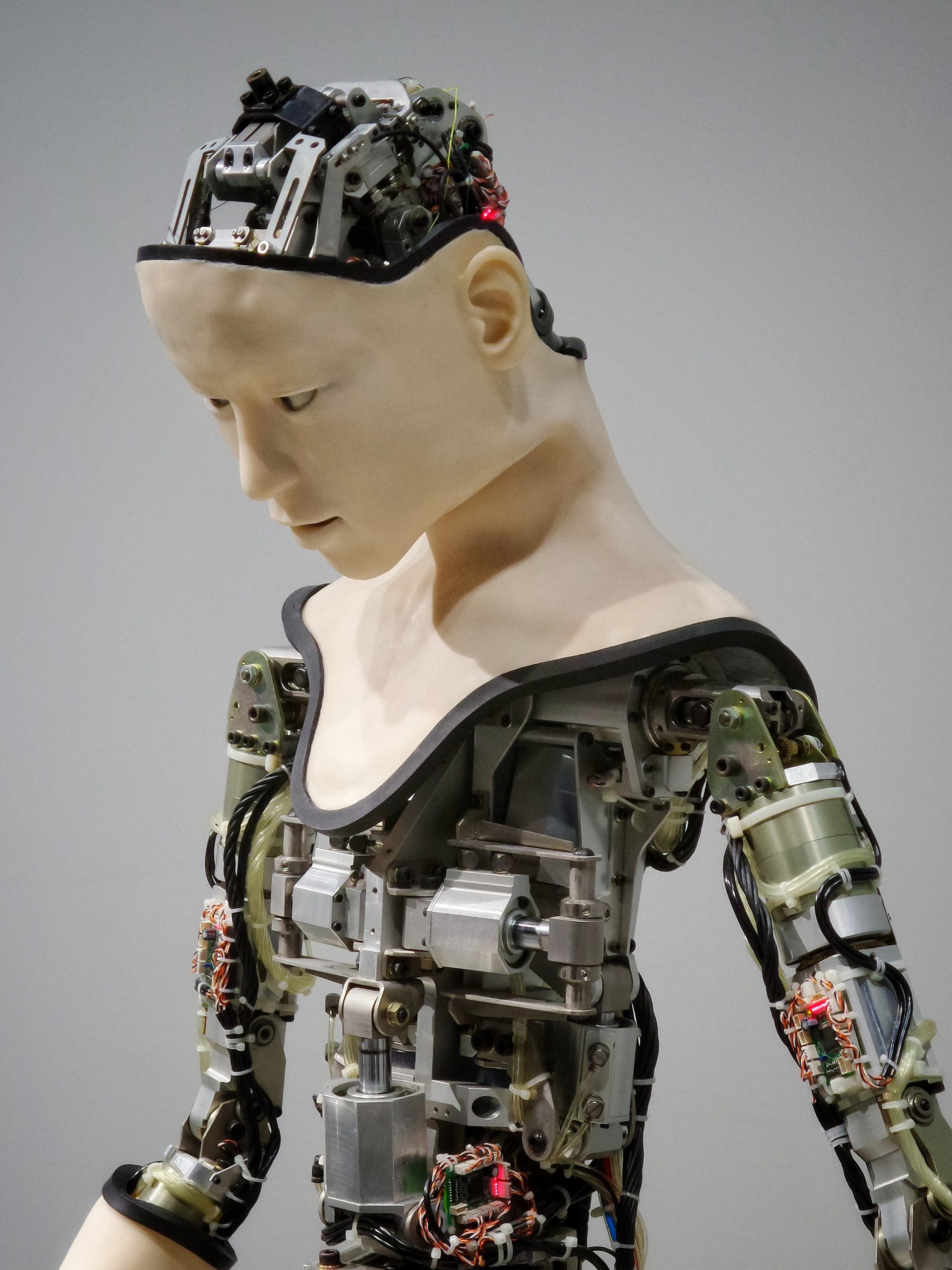

While AI is threatening jobs, it also offers an opportunity to augment people and help them do their jobs better. In the case of compliance, it is possible to develop decision navigators that guide people in real time through prescribed processes, such as selling a new insurance policy, in a way that ensures they always get it right and that is verifiable and forensically auditable. AI can take the customer’s context into account and guide the employee to ask the right questions, diagnose the right problems and identify the right solution (in this case an insurance policy) to meet the customer’s need.
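To make "verifiable and forensically auditable" concrete, here is a minimal sketch of one common way to keep a tamper-evident record of a guided process: each answered step is stored with a hash that also covers the previous entry, so any later alteration breaks the chain. The article does not say how decision navigators record their steps; the function names, step names and log layout below are assumptions made purely for illustration.

```python
import hashlib
import json


def _digest(step, answer, prev_hash):
    """Hash one log entry together with the hash of the entry before it."""
    body = {"step": step, "answer": answer, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, step, answer):
    """Record an answered step, chained to the previous entry (hypothetical helper)."""
    prev_hash = log[-1]["hash"] if log else ""
    log.append({
        "step": step,
        "answer": answer,
        "prev_hash": prev_hash,
        "hash": _digest(step, answer, prev_hash),
    })


def verify(log):
    """Return True only if no entry has been altered, removed or reordered."""
    prev_hash = ""
    for entry in log:
        expected = _digest(entry["step"], entry["answer"], entry["prev_hash"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Illustrative steps from a hypothetical insurance sale.
log = []
append_entry(log, "confirm_identity", "yes")
append_entry(log, "disclose_premium", "acknowledged")
print(verify(log))
```

If someone later edits a recorded answer, `verify` returns `False`, which is the property an auditor would rely on. A production system would also need secure storage and timestamps, which this sketch leaves out.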

This means people are no longer required simply to replicate the historically known and prescribed decisions and actions detailed in products, policies, systems and procedures. AI can handle this for them, freeing them to focus on having meaningful customer conversations that translate into improved, more compliant sales.

This will enable financial services companies to maintain a flexible pool of staff who can, for example, handle multiple product lines without being specialists in any of them.

In call centres in particular, this is becoming increasingly important as the drive to create super-agents capable of handling a wider band of calls intensifies (again due to cost pressures).

In effect, deploying decision navigators means getting more out of existing staff, with less training and less risk of decision errors.

Decision navigators can also be built and maintained by non-coders, which means businesses don’t have to rely on IT to capture the expertise they want every staff member to apply in every known situation. And because they are driven by data rather than fixed decision-tree logic, they can consider any combination of situational factors and apply different rules at different times, depending on the regulatory framework in force. They can also be integrated with third-party systems so that, where required, they consider all the data available to them when guiding staff to the correct decision and action.
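One way to picture rules held as data rather than as a hard-coded decision tree is the sketch below. It is an illustration under stated assumptions, not a description of any particular product: the rule layout, the framework labels, the factor names and the sample rules are all invented and do not reflect real regulation. Each rule is a plain record naming the framework it belongs to, the dates it is in force and the conditions it checks, so rules can be added or retired without changing the code that evaluates them.

```python
import operator
from datetime import date

# Comparison operators a rule may name in its conditions.
OPS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
    "==": operator.eq,
}

# Hypothetical rules, stored as data. "to": None means the rule is open-ended.
RULES = [
    {"framework": "framework_a", "from": date(2017, 1, 1), "to": None,
     "when": [("customer_age", "<", 18)],
     "action": "Obtain guardian consent"},
    {"framework": "framework_a", "from": date(2017, 1, 1), "to": date(2017, 12, 31),
     "when": [("cover_amount", ">", 1_000_000)],
     "action": "Request proof of income"},
    {"framework": "framework_b", "from": date(2018, 1, 1), "to": None,
     "when": [("cover_amount", ">", 500_000), ("smoker", "==", True)],
     "action": "Refer to underwriter"},
]


def applicable_actions(rules, framework, on, context):
    """Return the actions of every rule in force that matches the situation."""
    actions = []
    for rule in rules:
        in_force = rule["from"] <= on and (rule["to"] is None or on <= rule["to"])
        if rule["framework"] != framework or not in_force:
            continue
        # A rule matches only if every factor it names is known and passes.
        if all(name in context and OPS[op](context[name], value)
               for name, op, value in rule["when"]):
            actions.append(rule["action"])
    return actions


customer = {"customer_age": 17, "cover_amount": 2_000_000, "smoker": True}
print(applicable_actions(RULES, "framework_a", date(2017, 6, 1), customer))
print(applicable_actions(RULES, "framework_a", date(2018, 6, 1), customer))
```

The same customer gets different guidance on the two dates because the second rule has expired by 2018, which mirrors the idea of applying different rules at different times. Because the rules are ordinary records, they could in principle be maintained in a spreadsheet or form by someone who does not write code.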

The result is that financial services companies can now turn staff into customer experience and compliance assets, at a time when the regulatory environment is turning many staff into potential liabilities and hence driving much of the focus towards automation.

© BusinessLIVE MMXVII